Among the young large language models (LLMs), LLaMA and Alpaca AI are making their mark, but do you know how one fares against the other?

Despite their differences, both LLMs share the same underlying technology, since Alpaca is built on top of LLaMA, but they serve slightly different applications.

Read on to learn the differences between the two LLMs and how to take advantage of them.

What Is LLaMA AI?

LLaMA, short for ‘Large Language Model Meta AI,’ is a state-of-the-art foundational LLM designed by researchers at Meta and released on Feb 24, 2023.

A relatively small model compared to GPT-4, LLaMA can be adapted to answer and solve users’ various queries, but it was released mainly as a foundation model for researchers rather than as a finished consumer product.

It is a family of language models rather than a machine learning framework, and its smaller variants are designed to run on modest hardware, so its application may differ slightly from ChatGPT’s.

The LLaMA collection of language models ranges from 7 to 65 billion parameters in size, giving developers a wide choice of model scales.

Here is what makes LLaMA unique.

- Low Latency: Its smaller variants respond comparatively quickly at inference time, making them better suited to applications that need fast response times.

- Scalability: It scales from 7B to 65B parameters, and every model in the collection is trained on at least one trillion tokens of publicly available data.

- Memory Efficiency: The smaller models keep memory usage low, allowing them to run with limited memory resources.

- Adaptable Design: As a foundation model, it can be fine-tuned to create custom models for specific tasks.

- Compatibility: Its reference implementation is written in PyTorch, allowing developers to integrate it into their existing deep learning workflows.

What Is Alpaca AI?

Alpaca is an instruction-following language model developed by researchers at Stanford University and released in March 2023.

The model is designed to follow natural-language instructions, making it easier for researchers to study instruction-following models at low cost.

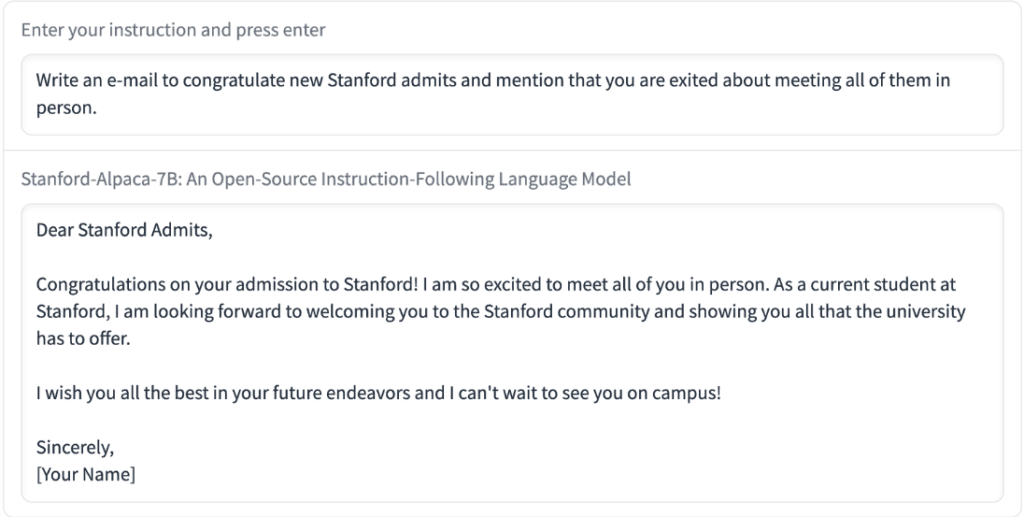

Like LLaMA and GPT, Alpaca can generate concise and informative answers to users’ prompts.

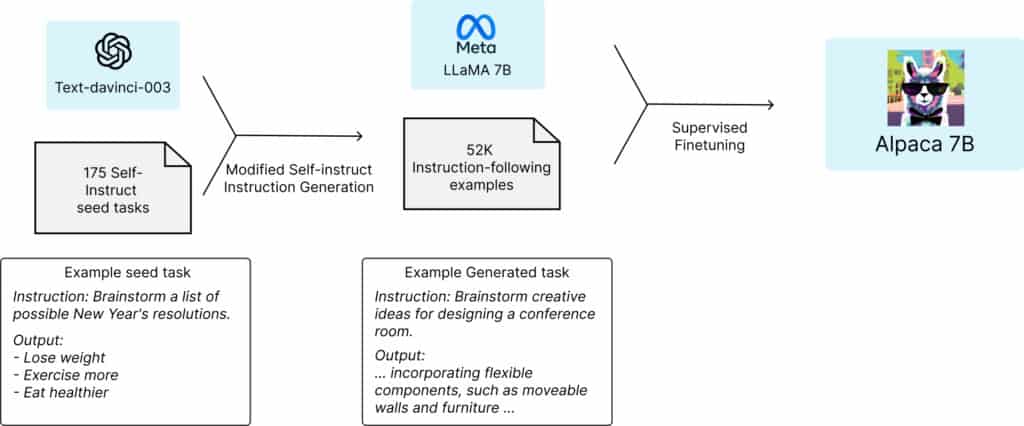

If you are wondering, Alpaca is a version of LLaMA’s 7B model fine-tuned on 52K instruction-following examples generated with OpenAI’s text-davinci-003.
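To make the idea of instruction data concrete, here is a minimal sketch of how one instruction record might be turned into a fine-tuning prompt. The field names (`instruction`, `input`, `output`) and the template wording follow the format published with Stanford Alpaca as best recalled, so treat the exact text as an assumption; the `build_prompt` helper and the sample record are hypothetical and exist only for illustration.

```python
# Prompt templates in the style used for Alpaca fine-tuning.
# The exact wording is an assumption reproduced for illustration.
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input "
    "that provides further context. Write a response that appropriately "
    "completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Response:\n"
)


def build_prompt(record: dict) -> str:
    """Format one instruction record as a prompt (hypothetical helper)."""
    if record.get("input", "").strip():
        return PROMPT_WITH_INPUT.format(
            instruction=record["instruction"], input=record["input"]
        )
    # Records without extra context use the shorter template.
    return PROMPT_NO_INPUT.format(instruction=record["instruction"])


# A made-up record; during fine-tuning the model learns to produce `output`.
example = {
    "instruction": "Translate the sentence into French.",
    "input": "Good morning.",
    "output": "Bonjour.",
}

print(build_prompt(example) + example["output"])
```

The base LLaMA model sees only raw text, so a fixed template like this is what teaches the fine-tuned model where the instruction ends and the answer begins.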

Here is what makes Alpaca AI unique.

- Instruction Tuning: It is fine-tuned on instruction-following demonstrations, so it responds to requests more directly than the base LLaMA model it is built on.

- Deep Learning Framework: It was fine-tuned using Hugging Face’s training framework, so users can load, train, and evaluate it with the same data loading, training, and evaluation infrastructure they already use for other models.

LLaMA Vs Alpaca AI – Similarities

You know these two are natural language models, but there are some striking similarities between the two that you should know about.

1. Performance And Efficiency

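Both LLMs aim to deliver strong performance from comparatively small, efficient models.

LLaMA achieves this goal by training smaller models on more data; according to its authors, LLaMA-13B outperforms the much larger GPT-3 on most benchmarks.

Alpaca, on the other hand, achieves it by fine-tuning the 7B LLaMA model on instruction data, which its authors report gives behavior similar to text-davinci-003 in their preliminary evaluation.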

2. Compatibility With Deep Learning Libraries

Both are compatible with popular deep-learning libraries and platforms.

This compatibility makes them accessible to developers, allowing quick integration with their machine-learning workflows.

3. Empirical Evaluation

Neither model provides automated program verification; both are language models whose quality is checked empirically.

LLaMA is evaluated on standard benchmarks covering areas such as common-sense reasoning, question answering, and code generation.

Alpaca was assessed by its authors in a blind comparison of its responses against those of OpenAI’s text-davinci-003.

4. Open Source And Active Communities

The code for both LLaMA and Alpaca is publicly available, and each project has a community of developers and researchers actively working to improve and extend its capabilities.

This allows for collaboration and contributions from a wide range of developers worldwide, leading to quicker development.

5. Ongoing Development

Both models are recent releases, with ongoing efforts to improve their performance, usability, and functionality.

LLaMA Vs Alpaca: Major Differences

Despite sharing the same underlying technology, LLaMA and Alpaca have somewhat different purposes and intentions.

Here are some fundamental differences that separate the two LLMs.

1. AI Purpose

LLaMA is a foundation model trained to predict the next token in a text, which gives researchers a general and efficient base to build on.

Hence, its out-of-the-box application may be more limited, while Alpaca is slightly more helpful to end users.

Alpaca is instruction-tuned, so it responds directly to requests, which allows for rapid development and prototyping of usable chatbots.

2. Model Size

LLaMA is available in larger sizes than Alpaca.

It comes in four model sizes (7B, 13B, 33B, and 65B parameters), and developers can choose which to implement.

Alpaca is a fine-tuned version of LLaMA’s 7B model, which makes it easier to use and implement.
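To see why model size matters in practice, the following back-of-the-envelope sketch estimates the memory needed just to hold each model’s weights. It assumes the nominal parameter counts from the size names (the real counts differ slightly) and typical bytes-per-parameter figures for each numeric format; it ignores activations and any other runtime overhead, so real requirements are higher. The function name `weight_memory_gb` is hypothetical.

```python
# Nominal parameter counts, in billions, taken from the size names.
# Assumption: the real counts differ slightly from these round numbers.
LLAMA_SIZES_B = {"7B": 7, "13B": 13, "33B": 33, "65B": 65}

# Assumed storage cost per parameter for common numeric formats.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, dtype: str = "fp16") -> float:
    """Approximate memory for the weights alone, in GB (10**9 bytes).

    One billion parameters at one byte each is one GB, so the estimate is
    simply parameters (in billions) times bytes per parameter.
    """
    return params_billion * BYTES_PER_PARAM[dtype]


for name, size in LLAMA_SIZES_B.items():
    row = ", ".join(
        f"{dtype}: {weight_memory_gb(size, dtype):.1f} GB"
        for dtype in BYTES_PER_PARAM
    )
    print(f"{name} -> {row}")
```

Under these assumptions, the 7B model that Alpaca builds on needs roughly 14 GB for its weights in 16-bit precision, while the 65B model needs roughly 130 GB, which is one reason the smallest model is the most accessible.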

3. Prompting Style

LLaMA, as a base model, simply continues whatever text it is given, so getting a useful answer often requires carefully constructed prompts that can be unfamiliar to many developers.

On the other hand, Alpaca is designed to be more accessible and familiar: it accepts a plain-language instruction and responds to it directly.

It also supports an optional input field that supplies extra context for the instruction.

4. Evaluation Techniques

While both LLaMA and Alpaca are evaluated empirically, they use different techniques to do so.

LLaMA’s evaluation relies on automatic scores on standard benchmarks in zero-shot and few-shot settings.

Alpaca’s evaluation relies on human judgment of its responses to a set of instructions, compared side by side with those of text-davinci-003.

5. Development Stage

LLaMA is a relatively new project and does not yet have all the features and functionality of more mature models like OpenAI’s GPT.

Alpaca is newer still: it was released in March 2023, a few weeks after LLaMA, and is intended as a research model with known limitations rather than a mature, stable product.

Final Thoughts

LLaMA and Alpaca offer strong performance from comparatively small models and leave room for customization through fine-tuning.

Each model offers unique advantages: LLaMA as a general foundation to build on, and Alpaca as a ready-made instruction follower.

However, neither model is open for unrestricted adoption, especially LLaMA, whose weights are released under a non-commercial research license. Consider requesting access from Meta to begin.

Frequently Asked Questions

Can Developers Adopt LLaMA And Alpaca For Integration?

Yes, but they come with limitations. Using LLaMA requires permission from Meta, while Alpaca is available only for academic research and not for commercial use.

Why Is Alpaca Not Available For Commercial Use?

There are three main reasons Alpaca is not available for commercial use:

- It is based on LLaMA, which has a non-commercial license.

- The instruction data is based on OpenAI’s text-davinci-003, whose terms of use prohibit developing models that compete with OpenAI.

- It lacks adequate safety measures for deployment to general users.