If you are looking for a reliable way to generate images from text prompts, you might have heard of Stable Diffusion.

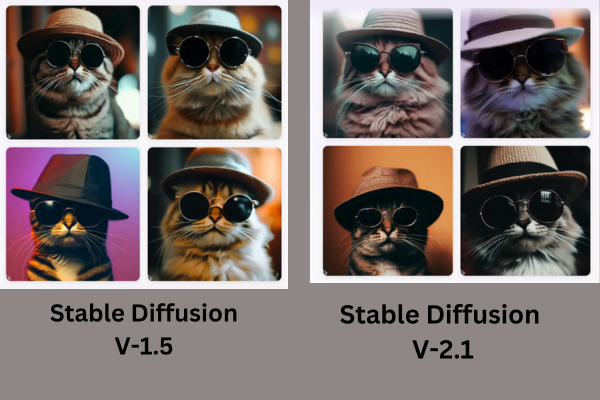

But what are the differences and similarities between Stable Diffusion 1.5 and 2.1, and which one should you use for your creative projects?

This article will compare these two models’ features, performance, and limitations and help you decide which is better for your artistic needs.

Table of Contents Show

What Are The Features Of Stable Diffusion 1.5?

Stable Diffusion version 1.5 is a text-to-image generation model that uses latent Diffusion to create high-resolution images from text prompts.

It was released in Oct 2022 by a partner of Stability AI named Runway Ml. This model uses a fixed pre-trained text-encoder CLIP ViT-L/14.

Stable Diffusion 1.5 is a latent Diffusion model which combines the autoencoder with the Diffusion model. It is trained in the latent space of the autoencoder.

Images are encoded via an encoder that turns images into latent representations.

Similarly, latent representation is used to guide the Diffusion process. It is a technique used to generate images by iteratively de-noising data.

Here are some interesting features of Stable Diffusion version 1.5;

- It can generate high-resolution images from any text prompt.

- It uses a pre-trained text encoder that understands natural language.

- It uses a latent variable that is computed from the noisy image.

- It can improve classifier-free guidance sampling by dropping the text-conditioning during training.

- It can be used with the Diffusers library or the RunwayML GitHub repository.

What Are The Features Of Stable Diffusion 2.1?

Stable Diffusion V-2.1 is a high-resolution image synthesis model that uses latent Diffusion and an OpenCLIP (Contrastive Language-Image Pre-Training) text encoder.

It was released in December 2022 by Stability AI. You can find some of the features of Stable Diffusion V-2.1.

- It supports negative and weighted prompts allowing a user to control the image synthesis.

- It can effectively provide natural scenery, people and pop culture.

- It also provides non-standard resolutions and extreme aspect ratios.

- It can combine with other models, such as KARLO.

- It can perform image variations and mixing operations.

Stable Diffusion 1.5 Vs. 2.1 – Similarities

Stable Diffusion uses a deep generative neural network that denoises random noise into text-encoder-guided images.

You can find some similarities between Stable Diffusion V-1.5 and V-2.1 below.

1. Purpose And Objective

Stable Diffusion can generate detailed images based on text prompts.

It can modify existing images or fill in the missing details on the image with the help of a prompt.

Stable Diffusion V-1.5 and V-2.1 are two versions of the same text-to-image model that can generate high-quality images and empower people to create stunning art.

Both versions aim to empower billions of people to produce stunning art within seconds.

The objectives of both versions are to generate high-quality images with a latent Diffusion model.

2. Architecture And Parameters

Stable Diffusion version-1.5 and 2.1 are based on the same number of parameters and architecture. The architecture is based on a u-Net with 32 residual blocks.

They use the latent Diffusion model architecture developed by the CompVis group at LMU Munich.

They also use the CLIP text encoder pre-trained on the attention mechanism.

3. Image Resolution

Both of the models can generate images at 512 x 512 resolution. However, Stable Diffusion V-2.1 can generate a larger and more detailed image at 768 x 768 pixels.

This means V-2.1 requires more computational resources and time to generate images.

Stable Diffusion 1.5 Vs. 2.1 – Major Differences

Stable Diffusion has different versions, such as v-1.5 and v-2.1, that differ in text encoder, resolution and training data. They both are trained on different datasets.

Moreover, Stable version 2.1 is an improvement over version 1.5 in terms of the generated images’ quality, diversity and stability.

Refer to the table below for some main differences between stable Diffusion 1.5 and 2.1.

| Aspect | Stable Diffusion V-1.5 | Stable Diffusion V-2.1 |

|---|---|---|

| Text Encoder | CLIP | OpenCLIP |

| Image Resolution | 512 x 512 pixels | 768 x 768 pixels |

| Dataset Filtering | More restrictive for adult content | Less filtered for adult content |

| Negative and weighted prompts | Not supported | Supported |

| Non-standard resolution and aspect ration | Not Supported | Supported |

| Diversity and realism of images | Lower for people and pop culture | Higher for people and architecture, interior design, wildlife etc. |

Now, let’s dive deeper into the significant differences between Stable Diffusion 1.5 and 2.1.

1. Text Encoder

Stable Diffusion version-1.5 uses CLIP ViT-L/14 as a text encoder.

While Stable Diffusion version-2.1 uses OpenCLIP-ViT/H as a text encoder.

OpenCLIP is a new text encoder developed by LAION (Large Scale Artificial Intelligence) that gives a deeper range of expression than CLIP.

2. Resolution Of Images

Stable Diffusion V-1.5 supports 512 x 512 resolution images.

However, Stable Diffusion V-2.1 supports higher resolution images of 768 x 768 based on SD2.1-768, which are twice the area of the former.

This model allows image variation and mixing operations described in Hierarchical Text-Conditional Image Generation With CLIP Latent.

This means Stable Diffusion V-2.1 can generate larger, more detailed images than V-1.5, capture more details and nuances from the prompt, and look more reliable than the original description.

3. Training Data

Stable Diffusion V-1.5 was trained on a dataset called lion-aesthetics V2 5+, filtered for adult content using LAION’s NSFW (Not Safe For Work) filter.

This model is good at generating architecture, interior design and so on. However, they are not good at generating people and pop-culture images.

On the other hand, Stable Diffusion V-2.1 was fine-tuned on a less filtered dataset for inappropriate or adult content.

This model improved the ability to generate people and pop-culture images.

Additionally, the Stable Diffusion 2.1 version supports non-standard and negative and weighted prompts allowing users to tell what not to generate.

This means Stable Diffusion V-2.1 gives a deeper range of expression and more control over the image synthesis.

Regardless Stable Diffusion V-1.5 does not support a negative prompt.

4. Stability

Stable Diffusion V-1.5 is more suitable for generating images of people and pop culture.

While Stable Diffusion V-2.1 is suitable for generating images of architecture, interior design and other landscape scenes.

The Stable Diffusion V-2.1 comes in two variants: Stable unCLIP-L and Stable unCLIP-H, conditioned on CLIP ViT-L and CLIP ViT-H image embeddings.

You can try a demo of the Stable unCLIP model on the web.

This version also improves anatomy and supports a range of art styles than version 1.5.

The Botton Line

Stable Diffusion versions 1.5 and 2.1 can generate high-quality images while choosing between them depends on your preferences and use cases.

If you want to generate more realistic, diverse and stable images, you can try V-2.1. However, if you want to generate images more specific to certain celebrities or styles, you can try V-1.5.

Moreover, you can even try both versions and compare the results yourself.