It is no surprise that ChatGPT is taking the world by storm, but did you know it took three generations of GPT models and extensive fine-tuning to reach where it is today?

You are probably curious about how ChatGPT works.

It also has diverse business and everyday applications that are worth knowing about.

Continue reading to find out how ChatGPT works and the truth behind its model.


How Was ChatGPT Built?

Few innovations have garnered as much recognition and interest in such a short time as ChatGPT.

But how much do you know about this AI chatbot? Let us start by unraveling what ChatGPT is and how it works.

GPT and GPT-2

ChatGPT is older than you may imagine.

The first generation, GPT (Generative Pre-trained Transformer), was released by OpenAI in 2018 as a language model.

Not long after, in 2019, OpenAI released GPT-2, an unsupervised transformer language model that generated human-like text with a high degree of coherence and contextual relevance.

However, OpenAI controversially withheld the full model at first due to concerns over its potential misuse in generating fake news or impersonating individuals.

Instead, it released progressively larger versions over the course of 2019, publishing the full model in November 2019.

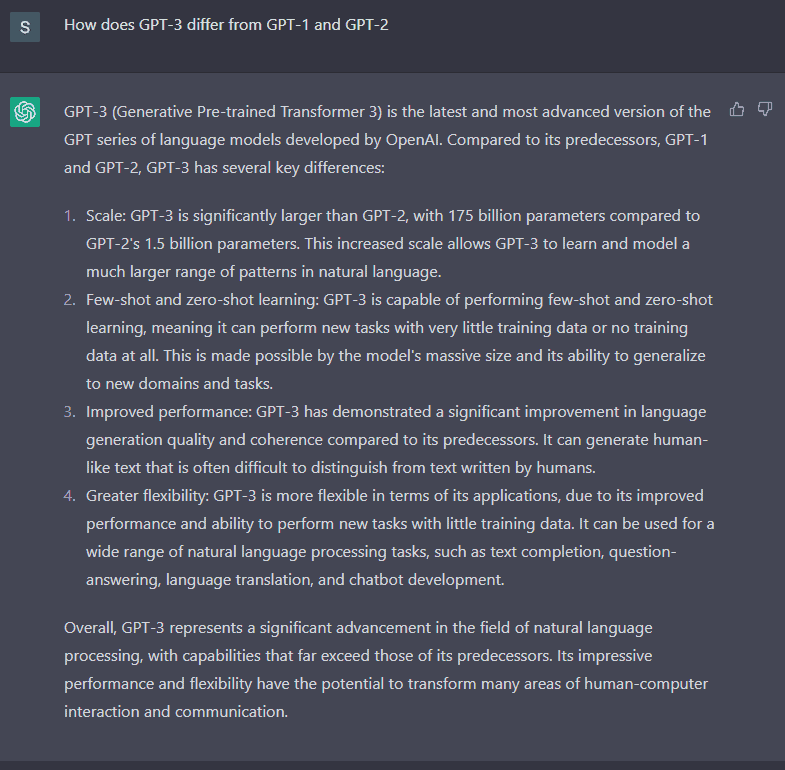

GPT-3 and ChatGPT

GPT-3 was first released as a beta in 2020, but it did not reach a wide public audience until the end of 2022, when OpenAI launched ChatGPT.

Compared with GPT-2's 1.5 billion parameters, GPT-3 boasts 175 billion parameters and was trained on a much larger dataset.

This training process involved pre-training the model on a massive amount of text data, such as books, articles, and websites, to help it learn the patterns and structures of human language.

ChatGPT's responses are so human-like that some observers have argued it could pass the Turing test, in which a human judge tries to tell whether they are conversing with a machine or a person.

Today, free and paid versions of the chatbot are available to businesses and individuals for a range of everyday applications.

In fact, ChatGPT was fine-tuned from a model in the GPT-3.5 series, an improved successor to GPT-3 that answers queries more accurately.

How Was ChatGPT Trained?

Before its public launch, ChatGPT was trained on massive amounts of unlabeled data and then rigorously fine-tuned.

Its training follows a two-stage process.

| Pre-training | Fine-tuning |
|---|---|
| The model is first pre-trained on a large corpus of unlabeled text data using unsupervised learning. | The model is then fine-tuned on a smaller dataset of conversational data, where it learns to generate human-like responses in a conversational context. |

Combining the two stages lets ChatGPT apply the general knowledge it gained during pre-training to generate high-quality responses in a conversational context.

1. Pre-Training Stage

Pre-training was performed on a massive dataset of diverse text sources, such as books, articles, and websites.

During this stage, the model learns to predict the next word, or sequence of words, in a passage based on the words that precede it.
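In the standard notation for language modeling (a general formulation, not one quoted from OpenAI), the probability of a text $w_1, \dots, w_n$ is factored into a chain of next-word predictions, and pre-training adjusts the model parameters $\theta$ to minimize the negative log-likelihood of the training text:

```latex
P(w_1, \dots, w_n) = \prod_{t=1}^{n} P(w_t \mid w_1, \dots, w_{t-1})

\mathcal{L}(\theta) = -\sum_{t=1}^{n} \log P_\theta(w_t \mid w_1, \dots, w_{t-1})
```

Minimizing this loss pushes the model to assign high probability to the word that actually comes next at every position in the training text.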

This language modeling process is critical: it is how the model learns the patterns and structures of human language.

Moreover, it helps the chatbot develop a general understanding of the world.
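Real GPT models use a transformer with billions of parameters, but the next-word prediction objective itself can be illustrated with a toy bigram model that simply counts which word follows which. The corpus and function names below are made up for illustration; this is a sketch of the idea under those assumptions, not how OpenAI trains ChatGPT.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each other word (a toy 'pre-training' pass)."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts: dict[str, Counter], prev: str) -> dict[str, float]:
    """Turn the counts for `prev` into a probability distribution over next words."""
    following = counts.get(prev.lower(), Counter())
    total = sum(following.values())
    return {word: n / total for word, n in following.items()} if total else {}


# Hypothetical training text.
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
print(next_word_probs(model, "the"))
```

Here "cat" follows "the" in two of the six cases, so it gets the highest probability; a real language model learns far richer patterns from far more context, but the goal of predicting the next word is the same.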

2. Fine-Tuning Stage

Fine-tuning involves further training the pre-trained model on a smaller dataset of conversational data, such as chat logs or customer service interactions.

It allows the model to learn to generate natural and contextually appropriate responses in an everyday setting.

The fine-tuning process works the same way as pre-training, but it focuses specifically on optimizing the model's performance in generating human-like responses.

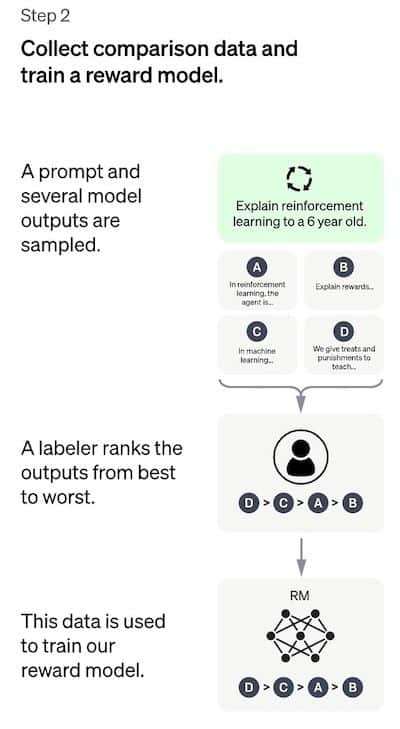

As part of fine-tuning, OpenAI combines supervised learning and reinforcement learning to make ChatGPT more effective.

| Supervised Learning | Reinforcement Learning |
|---|---|
| A branch of machine learning in which an algorithm learns to map queries to responses from labeled examples provided during training | A branch of machine learning in which an algorithm learns to make decisions from the feedback it receives from its environment, such as a chatbot conversation |
| The learned mapping function makes predictions on new, unseen input, producing original or follow-up responses to subsequent questions | The system interacts with the environment and receives feedback in the form of rewards or penalties for each action it takes |
| Used in speech recognition, natural language processing, fraud detection, and recommendation systems | The better defined the reward, the better the system can perform in its environment |
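The reinforcement learning idea of acting, receiving a reward, and adjusting can be illustrated with a classic toy problem, the multi-armed bandit. The canned replies and reward values below are hypothetical, and this epsilon-greedy bandit is a generic textbook example rather than the algorithm OpenAI uses (OpenAI has described fine-tuning ChatGPT with Proximal Policy Optimization).

```python
import random


def run_bandit(rewards: list[float], steps: int = 1000, epsilon: float = 0.1, seed: int = 0) -> list[float]:
    """Epsilon-greedy agent that learns which action earns the most reward.

    `rewards[i]` is the fixed reward the (toy) environment returns for action i.
    Returns the agent's learned value estimate for each action.
    """
    rng = random.Random(seed)
    estimates = [0.0] * len(rewards)  # learned value of each action
    pulls = [0] * len(rewards)        # how many times each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(rewards))  # explore: try a random action
        else:
            action = max(range(len(rewards)), key=lambda i: estimates[i])  # exploit the best so far
        reward = rewards[action]  # feedback from the environment
        pulls[action] += 1
        # Incremental average: move the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / pulls[action]
    return estimates


# Hypothetical example: three canned chatbot replies with different reward scores.
replies = ["Sure!", "I don't know.", "Here is a step-by-step answer."]
learned = run_bandit([0.5, 0.1, 0.9])
best = max(range(len(replies)), key=lambda i: learned[i])
print(replies[best])
```

The occasional random exploration is what lets the agent discover that the third reply earns the highest reward, even though it starts out favoring the first one.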

Furthermore, a separate reward model is used to score the quality of ChatGPT's responses.

To build it, human trainers ranked several responses to the same prompt from best to worst, and the reward model learned to assign higher scores to the responses people preferred.

Reinforcement learning then adjusts ChatGPT to produce responses that earn higher reward scores.
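In reinforcement learning from human feedback, a reward model is trained on human comparisons so that preferred responses score higher than rejected ones. A minimal sketch of that training uses a pairwise ranking loss commonly used for such reward models, $-\log \sigma(r_{chosen} - r_{rejected})$. The linear model, the two features, and the comparison data below are hypothetical stand-ins for a large neural network and real human rankings.

```python
import math


def reward(weights: list[float], features: list[float]) -> float:
    """Hypothetical linear reward model: score = w . x (a stand-in for a neural network)."""
    return sum(w * x for w, x in zip(weights, features))


def pairwise_loss(r_chosen: float, r_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)); small when the preferred reply scores higher."""
    return math.log1p(math.exp(-(r_chosen - r_rejected)))


def train_reward_model(pairs, dim: int, lr: float = 0.1, epochs: int = 200) -> list[float]:
    """Gradient descent on the pairwise loss over (chosen, rejected) feature pairs."""
    weights = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(weights, chosen) - reward(weights, rejected)
            # d(loss)/d(margin) = -sigmoid(-margin) = -1 / (1 + exp(margin))
            grad_scale = -1.0 / (1.0 + math.exp(margin))
            for i in range(dim):
                weights[i] -= lr * grad_scale * (chosen[i] - rejected[i])
    return weights


# Made-up features per response: [relevance, politeness], each between 0 and 1.
# Each pair is (response a human preferred, response a human ranked lower).
pairs = [
    ([1.0, 1.0], [0.0, 1.0]),
    ([1.0, 1.0], [1.0, 0.0]),
    ([0.0, 1.0], [0.0, 0.0]),
]
learned_weights = train_reward_model(pairs, dim=2)
print(learned_weights)
```

After training, both weights are positive, so the model rewards relevant and polite responses, mirroring the preferences encoded in the comparisons.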

How Does ChatGPT Work?

As previously mentioned, OpenAI combines supervised and reinforcement learning to fine-tune ChatGPT’s responses.

Therefore, it can answer questions on almost any topic with similar finesse, whatever their complexity.

For example, it can generate a working API or a basic software framework from scratch in many programming languages, and it can write songs, poems, or stories like a skilled artist.

Behind the scenes, machine learning models, natural language processing techniques, and supporting algorithms work together to produce apt responses.

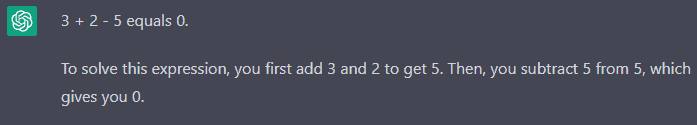

Let us walk through how ChatGPT works, step by step, using an example.

- Input Prompt: The user types in a text-based prompt, such as a question or a statement.

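- Tokenization: The prompt is converted into a sequence of tokens, each mapped to a numerical ID that the model can process. For example, "What is 3+2-5?" could be split into What [Token 1] is [Token 2] 3 [Token 3] + [Token 4] 2 [Token 5] - [Token 6] 5 [Token 7] ? [Token 8]. Real GPT tokenizers work with subword units, so the exact split may differ.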

- Embedding: Each token maps to a high-dimensional vector, known as an embedding, which captures the token's meaning; the model's layers then refine it using the surrounding context in the prompt.

- Processing: The embeddings pass through a series of transformer layers, which produce a representation of the model's best prediction of the most likely next words.

- Decoding: That representation is converted into probabilities over the model's vocabulary, a token is selected, and the selected tokens are mapped back into words to form the output response.

- Output Response: The model generates a response that is contextually relevant to the input prompt and sounds natural and human-like.
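The tokenization and embedding steps can be sketched in a few lines. This toy tokenizer splits on words and symbols, so it reproduces the eight tokens in the tokenization example above; real GPT tokenizers use learned subword vocabularies and trained embeddings, so the function names, vocabulary, and random vectors here are illustrative assumptions only.

```python
import random
import re


def tokenize(text: str) -> list[str]:
    """Toy tokenizer: words, numbers, and individual symbols become separate tokens."""
    return re.findall(r"\w+|[^\w\s]", text)


def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab: dict[str, int] = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab


def make_embeddings(vocab_size: int, dim: int, seed: int = 0) -> list[list[float]]:
    """Random embedding table; a real model learns these vectors during training."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]


tokens = tokenize("What is 3+2-5?")
vocab = build_vocab(tokens)
ids = [vocab[t] for t in tokens]           # tokenization: text -> numerical IDs
table = make_embeddings(len(vocab), dim=4)
vectors = [table[i] for i in ids]          # embedding: one vector per token
print(tokens)
print(ids)
```

In a real model the embedding table has tens of thousands of rows and hundreds or thousands of dimensions, and its values are learned rather than random.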

These steps repeat for every token in a response and for each new prompt the user enters, allowing the model to carry on an ongoing conversation with the user.

Unlike BERT-style models, which use masked language modeling to fill in hidden words, GPT models are autoregressive: they predict the next word from the words that precede it, one token at a time, to build a sentence.

For example, given the input "What is your", the model might predict "name," "age," or "address" as the next word.

This allows the chatbot to learn the structure of human language and generate more natural and fluent text.
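GPT-style generation can be sketched as a loop: score the possible next tokens, turn the scores into probabilities with a softmax, pick one, append it, and repeat. The vocabulary and scores below are invented for illustration, the toy conditions only on the previous token (a real GPT model conditions on the entire preceding context), and greedy selection is just one decoding strategy; deployed chat models usually sample from these probabilities, which is one reason the same prompt can produce different answers.

```python
import math

# Hypothetical next-token scores (logits) for a tiny vocabulary, keyed by the previous token.
LOGITS = {
    "<start>": {"what": 2.0, "hello": 1.0},
    "what": {"is": 3.0, "was": 1.0},
    "is": {"your": 2.5, "the": 2.0},
    "your": {"name": 2.0, "age": 1.5, "address": 1.0},
    "name": {"?": 3.0},
    "?": {"<end>": 5.0},
}


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Convert raw scores into probabilities that sum to 1."""
    top = max(scores.values())
    exps = {tok: math.exp(s - top) for tok, s in scores.items()}  # subtract max for numerical stability
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def generate(max_tokens: int = 10) -> list[str]:
    """Greedy autoregressive decoding: repeatedly append the most probable next token."""
    output: list[str] = []
    prev = "<start>"
    for _ in range(max_tokens):
        probs = softmax(LOGITS[prev])
        prev = max(probs, key=probs.get)
        if prev == "<end>":
            break
        output.append(prev)
    return output


print(" ".join(generate()))
```

Each chosen token becomes the input for the next prediction, which is how a sentence such as "what is your name ?" is built up one token at a time.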


Final Thoughts

ChatGPT is here to stay and is becoming an integral part of our lives, so it is worth adopting the technology and using it for good.

As the underlying model evolves with each new generation, it handles your queries with increasing skill.

Why not ask ChatGPT itself, "How does ChatGPT work?"

Frequently Asked Questions

How Much Time Does ChatGPT Take to Answer a Question?

The time it takes for ChatGPT to answer a question can vary depending on several factors.

These include the question's complexity, the length of the response, and the server load.

Remember, responses may take longer when the servers are under high demand or technical issues are affecting performance.

Why is ChatGPT Controversial?

The responses ChatGPT provides can sometimes be harmful, hurtful, inaccurate, or plagiarized.

This is because it generates responses based on patterns learned from existing literature and other materials.

Critics also worry that it makes cheating easier for students, who can have it complete their assignments for them.

How Accurate is ChatGPT’s Response?

The accuracy of ChatGPT's responses depends on the quality of the input prompt, the complexity of the question, and the topic or domain being discussed.

Remember, it is an artificial intelligence model that may not always provide accurate or reliable information.