The 7B in Pygmalion 7B stands for the model’s 7 billion parameters, which makes it larger than the earlier 6B model.

It is a conversational model fine-tuned from Meta’s LLaMA-7B.

It combines the dataset of the previous 6B models, chat models, and the usual Pygmalion persona.

However, you might run into some limitations and biases while using this model.

Continue reading to learn how to access and use Pygmalion 7B and how to format prompts for it.

What is Pygmalion 7B?

Pygmalion 7B is built on the LLaMA model and fine-tuned with the dataset of the 6B model.

You can use this model mainly for chatting and role-playing purposes.

Like the 6B model, it is a chat model; the project also released an experimental instruct model.

Features And Capabilities

Some notable features of the Pygmalion 7B model are listed below.

- No Filter Or Restrictions: No steps are taken to filter and restrict the output from this model.

- Low VRAM Requirement: It runs in 18GB of VRAM or less, so it offers good chat capability with modest resources.

- Fine-Tuned For RP: With its fine-tuned dataset for RP (Role-Playing), it is a fantastic RP partner.

- Free And Open-Source: You can modify/re-distribute the open-source model and code as you like.

- Frequently Updated: Pygmalion is regularly updated to improve the model’s performance.
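To put the VRAM figure in context, here is a rough back-of-the-envelope sketch in Python. It estimates the memory taken by the weights alone for a 7-billion-parameter model at a few common precisions. The precision names and byte sizes are standard, but the estimate is an assumption-laden lower bound: it ignores activations, the attention cache, and framework overhead, so real usage will be higher.

```python
# Rough weight-only memory estimate for a 7-billion-parameter model.
# This is a lower bound: activations, cache, and framework overhead are ignored.
PARAMS = 7_000_000_000

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: int, bytes_per_param: float) -> float:
    """Return the memory needed for the weights alone, in GB (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, size in BYTES_PER_PARAM.items():
        print(f"{name}: {weight_memory_gb(PARAMS, size):.1f} GB")
```

At 16-bit precision the weights alone come to about 14 GB, which leaves some headroom under the 18GB figure quoted above.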

How To Use Pygmalion 7B?

Pygmalion 7B falls under LLaMA’s license, which restricts it to non-commercial use, and the model is released as XOR-encoded files.

Therefore, you cannot install or use it until you combine the XOR files with the original LLaMA weights.

You can request access to the original LLaMA weights through Meta’s request form.

Once you have access to the model weights, follow the steps below to install it.

Note: REQUIREMENTS

- Python 3.10

- Git

- Convert the LLaMA weights to the HuggingFace Transformers (HF) format using the Transformers conversion script (convert_llama_weights_to_hf.py).

- Now, apply the XOR files by running the command below inside the cloned HuggingFace repository.

    python3 xor_codec.py \
    ./pygmalion-7b \
    ./xor_encoded_files \
    /path/to/hf-converted/llama-7b \
    --decode

- Replace “/path/to/hf-converted/llama-7b” with the location of the folder that holds your converted LLaMA model.

- Download the zip file of Pygmalion 7B JSONs and place it in the secure folder.

Note that this process does not work on native Windows, so Windows users must run it inside a WSL2 environment.

You can create the environment by installing “Ubuntu” under WSL2 and then following the process above.
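To see why the original LLaMA weights are needed, the idea behind XOR-encoded releases can be sketched in a few lines of Python. This is only an illustration of the principle, not the project's actual decoding script: the helper `xor_bytes` and the tiny byte strings are hypothetical stand-ins for real weight files.

```python
def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR two equal-length byte strings, byte by byte."""
    if len(data) != len(key):
        raise ValueError("inputs must have the same length")
    return bytes(a ^ b for a, b in zip(data, key))


# Hypothetical stand-ins for real weight files (a few bytes instead of gigabytes).
base_weights = bytes([0x10, 0x22, 0x35, 0x47])       # original LLaMA weights
finetuned_weights = bytes([0x11, 0x20, 0x35, 0x40])  # fine-tuned weights

# The publisher distributes only the XOR of the two, which is useless on its own.
encoded = xor_bytes(finetuned_weights, base_weights)

# Anyone who holds the base weights can recover the fine-tuned weights,
# because XOR-ing with the same key twice returns the original bytes.
decoded = xor_bytes(encoded, base_weights)
```

Because the encoded files reveal nothing usable without the base weights, only people who already have access to LLaMA can reconstruct the fine-tuned model.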

Prompting In Pygmalion 7B

This model uses the same interface and chat format as the previous Pygmalion models.

You can therefore reuse the prompt formatting from the previous model.

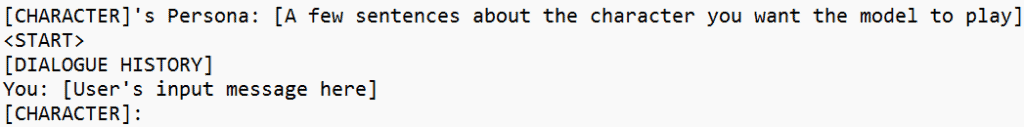

You can look at the formatting example below if you use the main model of Pygmalion 7B.

Here, [CHARACTER] is the name of the character you want the model to play, and <START> is a delimiter that separates the character’s persona from the dialogue history.

Here is an example of an Assistant Character in Pygmalion 7B.

<START>

Assistant: Hello! How may I help you today?

You: What is Zork?

Assistant:

Now, the model will generate the following result.

Zork is an interactive fiction computer game created in the 1970s by Infocom, Inc., which was later acquired by Activision Blizzard. It is widely considered one of the most influential games ever made and has been credited with popularizing text-based adventure games. The original version of Zork was written in the programming language MACRO-10, but it was ported to many other platforms over the years.
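A prompt like the one in the example above could be assembled with a small helper such as the following Python sketch. The function name `build_prompt` and its parameters are hypothetical, and the persona line layout (`[CHARACTER]'s Persona: ...`) is an assumption based on the usual Pygmalion format; adjust it if your front end expects something different.

```python
from typing import List


def build_prompt(character: str, persona: str, history: List[str], user_message: str) -> str:
    """Assemble a Pygmalion-style chat prompt (hypothetical helper).

    The persona line is optional; its layout is an assumption about the format.
    """
    lines = []
    if persona:
        lines.append(f"{character}'s Persona: {persona}")
    lines.append("<START>")                # delimiter before the dialogue history
    lines.extend(history)                  # earlier turns, one per line
    lines.append(f"You: {user_message}")   # the new user message
    lines.append(f"{character}:")          # left open for the model to complete
    return "\n".join(lines)


# Rebuild the Assistant example from the article (no persona line given there).
prompt = build_prompt(
    character="Assistant",
    persona="",
    history=["Assistant: Hello! How may I help you today?"],
    user_message="What is Zork?",
)
```

Ending the prompt with the character's name and a colon signals that the model should write that character's next reply.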

Limitations And Biases Of Pygmalion 7B

The model’s creators have mentioned a few limitations and biases.

- The model is intended only for fictional conversation and entertainment purposes.

- It is not fine-tuned to be safe and harmless.

- The model’s training data contains profanity and text that can be offensive.

- The model’s responses can be socially unacceptable or undesirable.

- The outputs can sometimes be factually wrong or misleading.

The Bottom Line

The Pygmalion 7B model is based on Meta’s LLaMA 7B, and because of its licensing, you have to request access to the original LLaMA weights before you can use it.

Moreover, this model has several limitations and biases, including offensive text, profanity, and factually wrong content.

Hopefully, this article has given you more insight into Pygmalion 7B.