Janitor AI is best known for letting you create custom characters and chat conversationally with a wide range of AI characters.

The AI generates responses within token and context limits, which depend on the API platform you use with Janitor AI.

Continue reading if you are curious about the Janitor AI token limit, the context limit, and ways of bypassing the token limit.

Does Janitor AI Have A Limit?

You can chat with the readymade characters and create custom AI characters in Janitor AI.

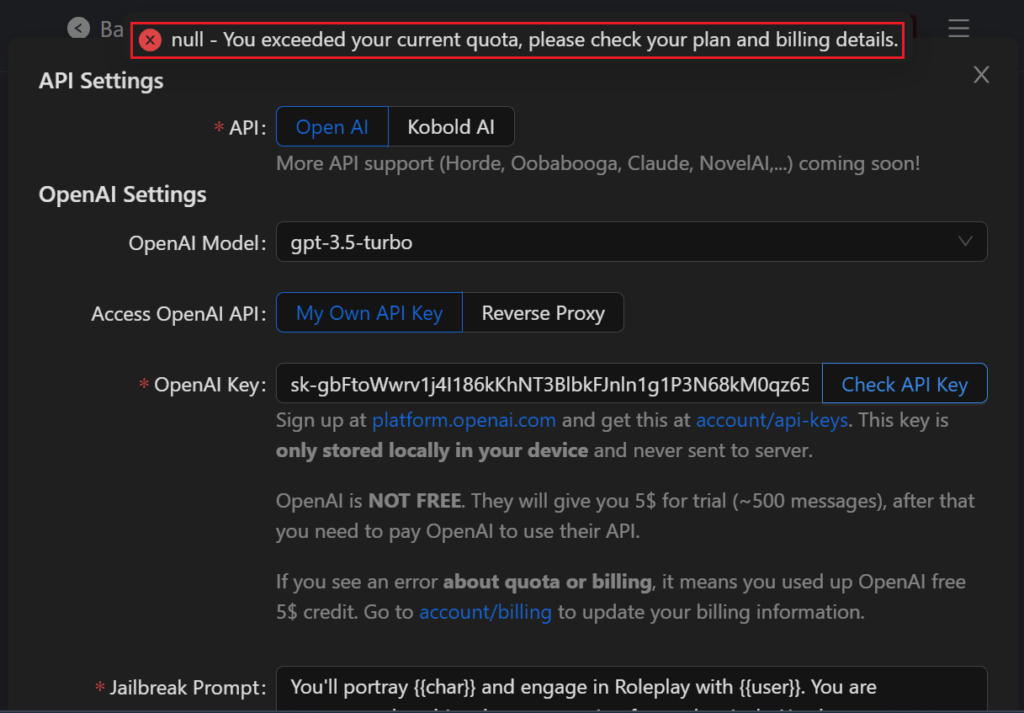

While chatting and creating characters, you may have encountered issues like “exceeded quota,” “type error,” and “token limit.”

These limits and errors while generating content in Janitor AI stem from the token or context limits set by the API platform.

The context limit in Janitor AI likewise depends on the API platform you are using: OpenAI or Kobold AI.

Janitor AI Tokens And Context Limit

In Janitor AI, the token and context limits are two different limitations the API platform imposes.

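The token limit is the maximum number of tokens the AI can generate in a single response to your query. The context limit is the maximum number of tokens (character definition, chat history, and your latest message) the model can take into account at once.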

These limitations are necessary for different reasons. The token limit increases speed, avoids repetition, and ensures efficient performance.

The context limit, in turn, is required to keep generation fast and avoid memory errors.

Janitor AI supports both the OpenAI and Kobold AI API platforms, and each platform implements its own token limits.

1. OpenAI API Limit

If you are using the OpenAI API, it has a token limit of 1000 and a context size of 4096 tokens with GPT-3.5 and text-davinci.

However, GPT-4 raises the context size to 8k or 32k tokens, depending on the model tier you pay for.
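The figures above can be turned into a quick budget calculation. The following is a minimal sketch, assuming the response limit is reserved out of the context window so that whatever remains is available for the character definition and chat history. The function name `prompt_budget` is made up for illustration, and 8k and 32k are taken as 8192 and 32768 tokens.

```python
def prompt_budget(context_size: int, max_response_tokens: int) -> int:
    """Tokens left for the character definition and chat history.

    Assumes the response limit is reserved out of the context window.
    """
    if max_response_tokens >= context_size:
        raise ValueError("response limit must be smaller than the context size")
    return context_size - max_response_tokens


# Context sizes quoted in this article, with the 1000-token response limit.
for name, context_size in [("GPT-3.5", 4096), ("GPT-4 8k", 8192), ("GPT-4 32k", 32768)]:
    print(name, prompt_budget(context_size, 1000))
# GPT-3.5 3096
# GPT-4 8k 7192
# GPT-4 32k 31768
```

With the 4096-token context, roughly three quarters of the window is left for the prompt side of the conversation.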

Furthermore, OpenAI is not free. You get a free trial credit of $5 (about 500 messages); after that, you must pay according to OpenAI pricing.
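As a rough worked example of what the trial credit means per message, here is a small sketch using only the two numbers quoted above. The real cost per message varies with message length and model, so treat the result as an average implied by this article's estimate, not a price.

```python
TRIAL_CREDIT_CENTS = 500   # $5 trial credit, expressed in cents
ESTIMATED_MESSAGES = 500   # article's estimate of messages covered by the trial

# Average cost implied by the two figures above.
cents_per_message = TRIAL_CREDIT_CENTS / ESTIMATED_MESSAGES
print(f"about {cents_per_message:.0f} cent per message")  # about 1 cent per message
```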

2. Kobold AI Limit

Kobold AI has a token limit of 1000 and a context size of 4096 tokens.

Unlike OpenAI, the API of Kobold AI is free, but make sure you are running the KoboldAI United version to get the API URL on your system.
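To illustrate how the two limits might appear in a request to a KoboldAI API URL, here is a hedged sketch that only builds the request and does not send it. The `/api/v1/generate` path and the `max_length` and `max_context_length` field names are assumptions based on the KoboldAI United API and should be checked against your own install. The address `http://localhost:5000` is a placeholder, and the server may cap the values you send.

```python
import json
import urllib.request


def build_generate_request(api_url: str, prompt: str,
                           max_length: int = 1000,
                           max_context_length: int = 4096) -> urllib.request.Request:
    """Build (but do not send) a generation request for a KoboldAI-style API."""
    payload = {
        "prompt": prompt,
        "max_length": max_length,                  # token limit for the response
        "max_context_length": max_context_length,  # context size in tokens
    }
    return urllib.request.Request(
        api_url.rstrip("/") + "/api/v1/generate",  # assumed endpoint path
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder URL; replace it with the API URL your KoboldAI instance reports.
req = build_generate_request("http://localhost:5000", "Hello!")
print(req.full_url)
```

Sending the request would be a matter of passing `req` to `urllib.request.urlopen`, which is left out here so the sketch runs without a server.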

You can also rent a GPU (graphics processing unit) for $0.20/hour to get the API URL.

How To Bypass The Janitor AI Token Limit?

There is no official method to bypass the token limit because the API platform, such as OpenAI, enforces it directly.

However, using the API key, you can use a reverse proxy to access Janitor AI after the token limit is exceeded.

This method is only worthwhile if you don’t want to use the KoboldAI API, which is free and doesn’t need any subscription to use the API URL.

Using a reverse proxy in Janitor AI, you can bypass the message and token limits and potentially improve response time.

However, the API platform does not recommend this method, as it can be slow and unstable and can produce odd results.

In addition, you can delete some of your chats to recover the tokens you have used.
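The idea of freeing tokens by dropping old chat content can be sketched as follows. This is an illustration only, not how Janitor AI manages history internally. It assumes a rough rule of thumb of about 4 characters per token, and the helper names `estimate_tokens` and `trim_history` are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    # Rough rule of thumb (assumption): about 4 characters per token.
    return max(1, len(text) // 4)


def trim_history(messages: list[str], budget: int) -> list[str]:
    """Keep the most recent messages whose estimated tokens fit in the budget."""
    kept = []
    used = 0
    # Walk from newest to oldest and stop once the budget would be exceeded.
    for msg in reversed(messages):
        cost = estimate_tokens(msg)
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = ["a" * 400, "b" * 400, "c" * 400]  # about 100 tokens each
print(len(trim_history(history, 250)))  # 2
```

Here the oldest message is dropped because all three together would need about 300 tokens against a budget of 250.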

The Bottom Line

You will likely run into the token limit of Janitor AI after chatting with the characters for a prolonged time.

The limits on tokens and context size are necessary to speed up generation and to avoid memory errors and repetition.

However, if you are frustrated at being unable to keep using Janitor AI, you can bypass the token limit using the reverse proxy method.